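This article uses Llama 2, the large language model open-sourced by Meta and served here through LocalAI, to demonstrate how Semantic Kernel (SK for short) can be integrated with a locally hosted large language model.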

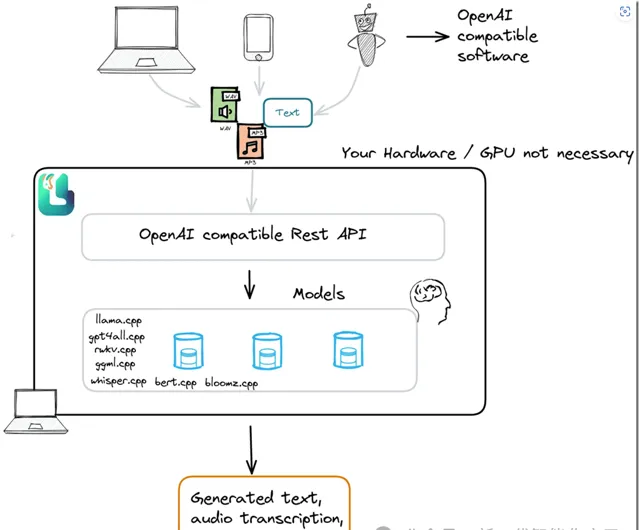

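SK supports many large language models, although the official samples mostly use GPT-3.5 and later through OpenAI and Azure OpenAI Service. Today we look at how to integrate SK with a locally deployed open-source model. We use LocalAI, an open-source project released under the MIT license: https://github.com/go-skynet/LocalAI.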

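LocalAI is a local inference framework that exposes a RESTful API compatible with the OpenAI API specification. It lets you run LLMs (and other models) locally on consumer-grade hardware or on your own servers, and it supports multiple model families compatible with the ggml format. No GPU is required. LocalAI uses C++ bindings to optimize speed. It is based on llama.cpp, gpt4all, rwkv.cpp, ggml, whisper.cpp for audio transcription, and bert.cpp for embeddings.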
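Because LocalAI follows the OpenAI API specification, a chat request sent to it has the same JSON shape as one sent to OpenAI. The following is a minimal sketch of building such a request body. The `ChatRequestBuilder` helper is hypothetical and not part of the sample, and the model name in the usage note is an assumption that depends on how your LocalAI instance is configured.

```csharp
using System.Text.Json;

// Hypothetical helper (not part of the sample repository): builds the JSON body
// of an OpenAI-style chat completion request.
public static class ChatRequestBuilder
{
    public static string Build(string model, string userMessage)
    {
        // The anonymous object mirrors the "model" and "messages" fields
        // of the OpenAI chat completions request format.
        var payload = new
        {
            model,
            messages = new[]
            {
                new { role = "user", content = userMessage }
            }
        };
        return JsonSerializer.Serialize(payload);
    }
}
```

For example, `ChatRequestBuilder.Build("llama-2-7b-chat", "Hello")` produces a body that could be POSTed to the `/v1/chat/completions` path of a LocalAI instance; the model name here is only a placeholder.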

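See the official Getting Started guide for deployment. LocalAI exposes the locally deployed model in the OpenAI API format, so it can be accessed through SK's OpenAI connector. All we need to do is point the OpenAI endpoint at LocalAI, which can be done with a custom HttpClient handler such as the following: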

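```csharp
// Redirects chat completion requests issued by the OpenAI connector to the LocalAI endpoint.
internal class OpenAIHttpclientHandler : HttpClientHandler
{
    private readonly KernelSettings _kernelSettings;

    public OpenAIHttpclientHandler(KernelSettings settings)
    {
        this._kernelSettings = settings;
    }

    protected override async Task<HttpResponseMessage> SendAsync(HttpRequestMessage request, CancellationToken cancellationToken)
    {
        // Only chat completion calls are rewritten; all other requests pass through unchanged.
        if (request.RequestUri != null && request.RequestUri.LocalPath == "/v1/chat/completions")
        {
            // Keep the path and query, and replace scheme, host, and port with the configured values.
            UriBuilder uriBuilder = new UriBuilder(request.RequestUri)
            {
                Scheme = this._kernelSettings.Scheme,
                Host = this._kernelSettings.Host,
                Port = this._kernelSettings.Port
            };
            request.RequestUri = uriBuilder.Uri;
        }

        return await base.SendAsync(request, cancellationToken);
    }
}
```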
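To see what the handler does to a request without starting a server, the URI rewrite can be isolated into a small function. This is only a sketch: `EndpointRewriter` is a hypothetical helper, and the `http://localhost:8080` target in the usage note is an assumption about where LocalAI is listening.

```csharp
using System;

// Hypothetical helper (not part of the sample repository): isolates the URI
// rewrite performed by the handler so it can be tried on its own.
public static class EndpointRewriter
{
    public static Uri Redirect(Uri original, string scheme, string host, int port)
    {
        // Mirror the handler's check: only chat completion requests are redirected.
        if (original.LocalPath != "/v1/chat/completions")
        {
            return original;
        }

        // UriBuilder copies the path and query; only scheme, host, and port are replaced.
        var builder = new UriBuilder(original)
        {
            Scheme = scheme,
            Host = host,
            Port = port
        };
        return builder.Uri;
    }
}
```

Calling `EndpointRewriter.Redirect(new Uri("https://api.openai.com/v1/chat/completions"), "http", "localhost", 8080)` returns a URI that points at the local host while keeping the `/v1/chat/completions` path, which is why the OpenAI connector needs no other changes.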

With the preparation done, the next step is to assemble the components so that they work together. Open Visual Studio Code and create a C# project named sk-csharp-hello-world, with the following Program.cs:

```csharp
using System.Reflection;
using config;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Logging;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.OpenAI;
using Microsoft.SemanticKernel.PromptTemplates.Handlebars;
using Plugins;

var kernelSettings = KernelSettings.LoadSettings();
var handler = new OpenAIHttpclientHandler(kernelSettings);
IKernelBuilder builder = Kernel.CreateBuilder();
builder.Services.AddLogging(c => c.SetMinimumLevel(LogLevel.Information).AddDebug());
builder.AddChatCompletionService(kernelSettings, handler);
builder.Plugins.AddFromType<LightPlugin>();
Kernel kernel = builder.Build();

// Load prompt from resource
using StreamReader reader = new(Assembly.GetExecutingAssembly().GetManifestResourceStream("prompts.Chat.yaml")!);
KernelFunction prompt = kernel.CreateFunctionFromPromptYaml(
    reader.ReadToEnd(),
    promptTemplateFactory: new HandlebarsPromptTemplateFactory()
);

// Create the chat history
ChatHistory chatMessages = [];

// Loop till we are cancelled
while (true)
{
    // Get user input
    System.Console.Write("User > ");
    chatMessages.AddUserMessage(Console.ReadLine()!);

    // Get the chat completions
    OpenAIPromptExecutionSettings openAIPromptExecutionSettings = new()
    {
    };

    // Stream the response from the model
    var result = kernel.InvokeStreamingAsync<StreamingChatMessageContent>(
        prompt,
        arguments: new KernelArguments(openAIPromptExecutionSettings) {
            { "messages", chatMessages }
        });

    // Print the chat completions
    ChatMessageContent? chatMessageContent = null;
    await foreach (var content in result)
    {
        if (chatMessageContent == null)
        {
            // First chunk: print the prefix and start accumulating the full message
            System.Console.Write("Assistant > ");
            chatMessageContent = new ChatMessageContent(
                content.Role ?? AuthorRole.Assistant,
                content.ModelId!,
                content.Content!,
                content.InnerContent,
                content.Encoding,
                content.Metadata);
        }
        else
        {
            // Later chunks: append the streamed text to the accumulated message
            chatMessageContent.Content += content;
        }

        // Print the chunk after the prefix so that "Assistant > " always comes first
        System.Console.Write(content);
    }

    System.Console.WriteLine();
    chatMessages.Add(chatMessageContent!);
}
```

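First, we import the namespaces needed for everything to work (the `using` directives at the top of Program.cs).

Then we create an instance of a kernel builder (through the builder pattern rather than by calling a constructor), which will help shape our kernel.

```csharp
IKernelBuilder builder = Kernel.CreateBuilder();
```

Do you need to know what is happening at every moment? The answer is yes! So we add logging to the kernel with the `builder.Services.AddLogging` call.

We want to be able to use Microsoft-hosted models through Azure OpenAI or OpenAI as well as the local model served through LocalAI, so all of them can be registered with the kernel. This is what the `builder.AddChatCompletionService` call does; it is an extension method defined as follows: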

```csharp
internal static class ServiceCollectionExtensions
{
    /// <summary>
    /// Adds a chat completion service to the list. It can be an OpenAI or Azure OpenAI backend service,
    /// or an OpenAI-compatible backend (HunyuanAI, LocalAI) reached through the custom handler.
    /// </summary>
    /// <param name="kernelBuilder">The kernel builder to register the service with.</param>
    /// <param name="kernelSettings">Settings that select and configure the backend.</param>
    /// <param name="handler">Handler that redirects requests to the OpenAI-compatible endpoint.</param>
    /// <exception cref="ArgumentException">Thrown when the service type is not recognized.</exception>
    internal static IKernelBuilder AddChatCompletionService(this IKernelBuilder kernelBuilder, KernelSettings kernelSettings, HttpClientHandler handler)
    {
        switch (kernelSettings.ServiceType.ToUpperInvariant())
        {
            case ServiceTypes.AzureOpenAI:
                kernelBuilder = kernelBuilder.AddAzureOpenAIChatCompletion(
                    kernelSettings.DeploymentId,
                    endpoint: kernelSettings.Endpoint,
                    apiKey: kernelSettings.ApiKey,
                    serviceId: kernelSettings.ServiceId,
                    modelId: kernelSettings.ModelId);
                break;

            case ServiceTypes.OpenAI:
                kernelBuilder = kernelBuilder.AddOpenAIChatCompletion(
                    modelId: kernelSettings.ModelId,
                    apiKey: kernelSettings.ApiKey,
                    orgId: kernelSettings.OrgId,
                    serviceId: kernelSettings.ServiceId);
                break;

            // Both backends speak the OpenAI protocol, so they reuse the OpenAI connector
            // with an HttpClient whose handler rewrites the request URI.
            case ServiceTypes.HunyuanAI:
            case ServiceTypes.LocalAI:
                kernelBuilder = kernelBuilder.AddOpenAIChatCompletion(
                    modelId: kernelSettings.ModelId,
                    apiKey: kernelSettings.ApiKey,
                    httpClient: new HttpClient(handler));
                break;

            default:
                throw new ArgumentException($"Invalid service type value: {kernelSettings.ServiceType}");
        }

        return kernelBuilder;
    }
}
```
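Note that the `switch` compares against the upper-cased service type, so the `ServiceTypes` constants have to be upper-case strings for any case to match. The article does not show that class, so the following is only a sketch under that assumption: the constant values and the `ServiceTypeResolver` helper are hypothetical and may differ from the sample repository.

```csharp
using System;

// Hypothetical sketch: these constant values are assumed, not copied from the sample.
// They must be upper case because the switch matches on ToUpperInvariant().
public static class ServiceTypes
{
    public const string OpenAI = "OPENAI";
    public const string AzureOpenAI = "AZUREOPENAI";
    public const string HunyuanAI = "HUNYUANAI";
    public const string LocalAI = "LOCALAI";
}

public static class ServiceTypeResolver
{
    // Returns true when the backend is reached through the custom HttpClient handler.
    public static bool UsesCustomHandler(string serviceType)
    {
        switch (serviceType.ToUpperInvariant())
        {
            case ServiceTypes.AzureOpenAI:
            case ServiceTypes.OpenAI:
                return false;
            case ServiceTypes.HunyuanAI:
            case ServiceTypes.LocalAI:
                return true;
            default:
                throw new ArgumentException($"Invalid service type value: {serviceType}");
        }
    }
}
```

With this arrangement the configured value is case-insensitive: `"LocalAI"`, `"localai"`, and `"LOCALAI"` all select the LocalAI branch.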

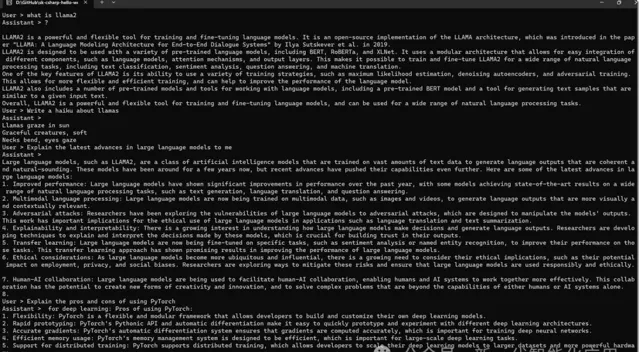

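Next, we start a chat loop that uses SK's streaming API, `InvokeStreamingAsync` (the `kernel.InvokeStreamingAsync` call inside the `while` loop in Program.cs). Run the program to try it out.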
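The streaming pattern itself is independent of SK: each chunk is displayed as soon as it arrives, and the chunks are also accumulated so that the complete reply can be added to the chat history. A minimal sketch of that idea using plain `IAsyncEnumerable<string>`; `StreamingDemo` and its fake stream are hypothetical stand-ins for the model response, not SK APIs.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;

// Hypothetical stand-in for a streamed model reply (not an SK API).
public static class StreamingDemo
{
    // Yields the reply in small chunks, as a streaming endpoint would.
    public static async IAsyncEnumerable<string> FakeStream()
    {
        foreach (var chunk in new[] { "Hello", ", ", "world", "!" })
        {
            await Task.Yield();
            yield return chunk;
        }
    }

    // Passes each chunk to onChunk as it arrives and returns the full reply.
    public static async Task<string> CollectAsync(IAsyncEnumerable<string> chunks, Action<string> onChunk)
    {
        var reply = new StringBuilder();
        await foreach (var chunk in chunks)
        {
            onChunk(chunk);      // e.g. Console.Write for incremental display
            reply.Append(chunk); // keep the full text for the chat history
        }
        return reply.ToString();
    }
}
```

In a console program, `await StreamingDemo.CollectAsync(StreamingDemo.FakeStream(), Console.Write)` prints the chunks one by one and returns the assembled text, which plays the same role as the accumulated `chatMessageContent` in the chat loop.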

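Source code for the sample in this article: https://github.com/geffzhang/sk-csharp-hello-world

Reference articles:

Deploying LocalAI with Docker for private, local text-to-speech (TTS), speech-to-text, and GPT features | Mr.Pu's personal blog (putianhui.cn)

LocalAI: a self-hosted, community-driven, local alternative compatible with the OpenAI API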