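Introduction

Browsers currently do not support the RTSP protocol. The usual workaround is to convert the RTSP stream into a format the browser can play before showing it on a web page. Because these approaches add a decode/transcode step, they introduce some latency and are a poor fit for scenarios with strict real-time requirements. With WebAssembly, part of that work can be moved into the browser to reduce latency.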

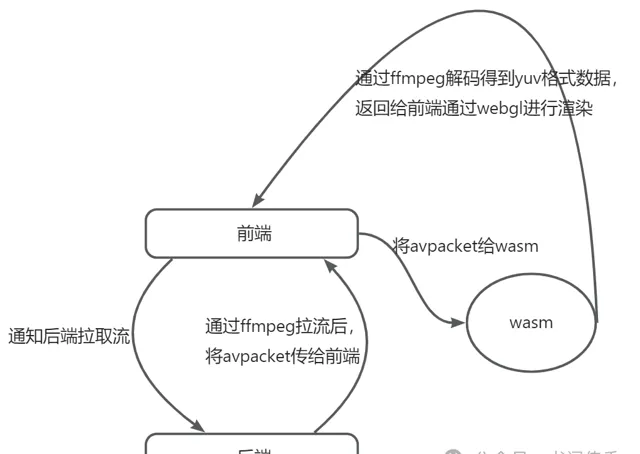

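Design

The backend pulls the RTSP stream, extracts the raw (still encoded) packets, and sends them to the frontend over WebSocket. The frontend decodes them with WebAssembly and renders the result with WebGL. In other words, the decoding work moves to the frontend. The approach works end to end, although the results are still being tuned.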

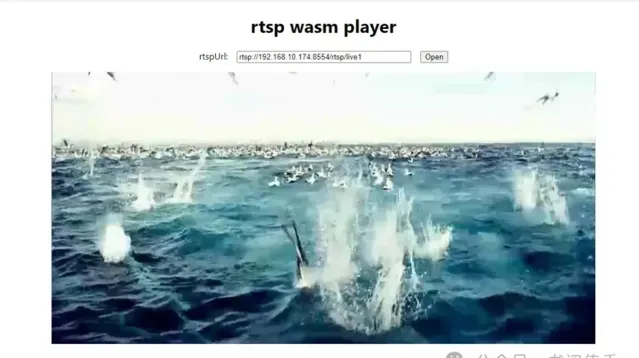

Screenshot of the current result

WASM

Note: the environment used to build the wasm in this article:

Ubuntu 22.04

Emscripten 3.1.55

FFmpeg 4.4.4

The Emscripten toolchain

Emscripten compiles C/C++ code to wasm.

Installing emsdk

    # Get the emsdk repo
    git clone https://github.com/emscripten-core/emsdk.git
    # Enter that directory
    cd emsdk
    # Fetch the latest version of the emsdk (not needed the first time you clone)
    git pull
    # Download and install the latest SDK tools.
    ./emsdk install latest
    # Make the "latest" SDK "active" for the current user. (writes .emscripten file)
    ./emsdk activate latest
    # Activate PATH and other environment variables in the current terminal
    source ./emsdk_env.sh

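Note: source ./emsdk_env.sh only affects the current terminal, so it has to be run again in every new terminal. ./emsdk activate latest writes the .emscripten config file and only needs to be rerun when switching SDK versions.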

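Building FFmpeg

Our wasm module uses FFmpeg code, so FFmpeg first has to be compiled into static libraries that the module can link against.

Download the FFmpeg source from the official site and extract it, then run the following in the FFmpeg source directory: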

    emconfigure ./configure --cc="emcc" --cxx="em++" --ar="emar" --ranlib=emranlib \
        --prefix=../ffmpeg-emcc --enable-cross-compile --target-os=none --arch=x86_64 --cpu=generic \
        --disable-ffplay --disable-ffprobe --disable-asm --disable-doc --disable-devices --disable-pthreads --disable-w32threads --disable-network \
        --disable-hwaccels --disable-parsers --disable-bsfs --disable-debug --disable-protocols --disable-indevs --disable-outdevs --enable-protocol=file \
        --disable-stripping
    emmake make
    emmake make install

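This disables many modules that the wasm build does not need. emconfigure and emmake are wrappers provided by Emscripten that run configure and make with the Emscripten toolchain.

Note: do not pick too new an FFmpeg version. In the author's experience, the latest release could not be built with the ./configure invocation above.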

Testing the FFmpeg libraries

A small ffmpeg_test.cpp checks that the libraries can be linked:

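    #include <cstdio>  // printf

    extern "C" {
    #include <libavcodec/avcodec.h>
    }

    int main() {
        // avcodec_version() returns the packed libavcodec version number.
        unsigned codecVer = avcodec_version();
        printf("avcodec version is: %u\n", codecVer);
        return 0;
    }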

Commands to build and run it. Compiling produces three files: wasm, js and html.

    emcc ffmpeg_test.cpp ./lib/libavcodec.a \
        -I "./include" \
        -o ./test.html
    emrun --no_browser --port 8090 test.html

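Opening test.html prints the version as expected, which shows that the libraries are fine.

Writing the decoder

The decoder, web_decoder.cpp, is shown below: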

    #include <cstdint>
    #include <cstdio>
    #include <cstdlib>
    #include <cstring>
    #include <emscripten/emscripten.h>

    #ifdef __cplusplus
    extern "C" {
    #endif

    #include <libavcodec/avcodec.h>
    #include <libavformat/avformat.h>
    #include <libavutil/imgutils.h>
    #include <libswscale/swscale.h>
    #include <libswresample/swresample.h>

    // Largest frame supported (1920 x 1080); yuv_buffer is sized for it.
    static const int MAX_WIDTH = 1920;
    static const int MAX_HEIGHT = 1080;

    typedef struct {
        AVCodecContext *codec_ctx;   // decoder context
        AVPacket *raw_pkt;           // holds the packet passed in from JS
        AVFrame *decode_frame;       // holds the decoded frame
        struct SwsContext *sws_ctx;  // pixel-format conversion; decoded data is not always YUV420P
        uint8_t *sws_data;
        AVFrame *yuv_frame;          // frame converted to YUV420P when the decoder outputs another format
        uint8_t *js_buf;
        unsigned js_buf_len;
        uint8_t *yuv_buffer;
    } JSDecodeHandle, *LPJSDecodeHandle;

    // Releases everything initDecoder allocated; the FFmpeg free functions accept null pointers.
    static void destroyHandle(LPJSDecodeHandle handle) {
        avcodec_free_context(&handle->codec_ctx);
        av_frame_free(&handle->decode_frame);
        av_packet_free(&handle->raw_pkt);
        free(handle->yuv_buffer);
        free(handle);
    }

    LPJSDecodeHandle EMSCRIPTEN_KEEPALIVE initDecoder() {
        auto handle = static_cast<LPJSDecodeHandle>(calloc(1, sizeof(JSDecodeHandle)));
        if (!handle) return nullptr;
        handle->raw_pkt = av_packet_alloc();
        handle->decode_frame = av_frame_alloc();
        const AVCodec *codec = avcodec_find_decoder(AV_CODEC_ID_H264);
        if (!codec) {
            fprintf(stderr, "Codec not found\n");
            destroyHandle(handle);
            return nullptr;
        }
        handle->codec_ctx = avcodec_alloc_context3(codec);
        if (!handle->codec_ctx) {
            fprintf(stderr, "Could not allocate video codec context\n");
            destroyHandle(handle);
            return nullptr;
        }
        // handle->codec_ctx->thread_count = 5;
        if (avcodec_open2(handle->codec_ctx, codec, nullptr) < 0) {
            fprintf(stderr, "Could not open codec\n");
            destroyHandle(handle);
            return nullptr;
        }
        // Holds the decoded YUV data that is handed back to the frontend.
        handle->yuv_buffer = static_cast<uint8_t *>(malloc(MAX_WIDTH * MAX_HEIGHT * 3 / 2));
        fprintf(stdout, "ffmpeg h264 decode init success.\n");
        return handle;
    }

    uint8_t *EMSCRIPTEN_KEEPALIVE GetBuffer(LPJSDecodeHandle handle, int len) {
        // FFmpeg needs AV_INPUT_BUFFER_PADDING_SIZE spare bytes after the packet data.
        unsigned needed = static_cast<unsigned>(len) + AV_INPUT_BUFFER_PADDING_SIZE;
        if (handle->js_buf_len < needed) {
            if (handle->js_buf) free(handle->js_buf);
            handle->js_buf = static_cast<uint8_t *>(malloc(needed * 2));
            memset(handle->js_buf, 0, needed * 2);  // important: the padding must be zeroed
            handle->js_buf_len = needed * 2;
        }
        return handle->js_buf;
    }

    int EMSCRIPTEN_KEEPALIVE Decode(LPJSDecodeHandle handle, int len) {
        // Re-zero the padding, since the buffer is reused between packets.
        memset(handle->js_buf + len, 0, AV_INPUT_BUFFER_PADDING_SIZE);
        handle->raw_pkt->data = handle->js_buf;
        handle->raw_pkt->size = len;
        int ret = avcodec_send_packet(handle->codec_ctx, handle->raw_pkt);
        if (ret < 0) {
            fprintf(stderr, "Error sending a packet for decoding\n");  // 0x00 00 00 01
            return -1;
        }
        // A frame is not produced for every packet, so this call does not always succeed.
        ret = avcodec_receive_frame(handle->codec_ctx, handle->decode_frame);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {
            fprintf(stderr, "EAGAIN -- ret:%d -%d -%d -%s\n", ret, AVERROR(EAGAIN), AVERROR_EOF, av_err2str(ret));
            return -1;
        } else if (ret < 0) {
            fprintf(stderr, "Error during decoding\n");
            return -1;
        }
        return ret;
    }

    int EMSCRIPTEN_KEEPALIVE GetWidth(LPJSDecodeHandle handle) {
        return handle->decode_frame->width;
    }

    int EMSCRIPTEN_KEEPALIVE GetHeight(LPJSDecodeHandle handle) {
        return handle->decode_frame->height;
    }

    uint8_t *EMSCRIPTEN_KEEPALIVE GetRenderData(LPJSDecodeHandle handle) {
        int width = handle->decode_frame->width;
        int height = handle->decode_frame->height;
        // Refuse frames that would not fit into yuv_buffer.
        int size = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, width, height, 1);
        if (size < 0 || size > MAX_WIDTH * MAX_HEIGHT * 3 / 2) {
            fprintf(stderr, "Unsupported frame size %dx%d\n", width, height);
            return nullptr;
        }

        // Make sure the data we hand back is YUV420P.
        bool sws_trans = false;
        if (handle->decode_frame->format != AV_PIX_FMT_YUV420P) {
            sws_trans = true;
            fprintf(stderr, "need transfer :%d\n", handle->decode_frame->format);
        }

        AVFrame *new_frame = handle->decode_frame;
        if (sws_trans) {
            if (handle->sws_ctx == nullptr) {
                handle->sws_ctx = sws_getContext(width, height, (enum AVPixelFormat) handle->decode_frame->format,  // in
                                                 width, height, AV_PIX_FMT_YUV420P,                                  // out
                                                 SWS_BICUBIC, nullptr, nullptr, nullptr);
                handle->yuv_frame = av_frame_alloc();
                handle->yuv_frame->width = width;
                handle->yuv_frame->height = height;
                handle->yuv_frame->format = AV_PIX_FMT_YUV420P;
                int numbytes = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, width, height, 1);
                handle->sws_data = (uint8_t *) av_malloc(numbytes * sizeof(uint8_t));
                av_image_fill_arrays(handle->yuv_frame->data, handle->yuv_frame->linesize, handle->sws_data,
                                     AV_PIX_FMT_YUV420P, width, height, 1);
            }
            if (sws_scale(handle->sws_ctx,
                          handle->decode_frame->data, handle->decode_frame->linesize, 0, height,  // in
                          handle->yuv_frame->data, handle->yuv_frame->linesize                    // out
                          ) <= 0) {
                fprintf(stderr, "Error in SWS Scale to YUV420P.\n");
                return nullptr;
            }
            new_frame = handle->yuv_frame;
        }

        // Copy the Y, U and V planes into one tightly packed buffer.
        // av_image_copy_to_buffer honours each plane's linesize, which may be larger than its width.
        if (av_image_copy_to_buffer(handle->yuv_buffer, size, new_frame->data, new_frame->linesize,
                                    AV_PIX_FMT_YUV420P, width, height, 1) < 0) {
            fprintf(stderr, "Error copying frame data\n");
            return nullptr;
        }
        return handle->yuv_buffer;
    }

    #ifdef __cplusplus
    }
    #endif
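The buffer returned by GetRenderData is a tightly packed YUV420P frame: the full-resolution Y plane first, then the U and V planes at a quarter of its size each. A WebGL renderer typically uploads each plane as its own texture, so the frontend needs the three offsets. The sketch below shows that arithmetic; yuv420pSize and splitYuv420p are hypothetical helper names that are not part of the original project, and the code assumes even frame dimensions.

```typescript
// Hypothetical helpers; only the Y/U/V ordering comes from the decoder above.

interface Yuv420pPlanes {
  y: Uint8Array;
  u: Uint8Array;
  v: Uint8Array;
}

/** Bytes in one packed YUV420P frame: Y (w*h) plus U and V (w*h/4 each). */
function yuv420pSize(width: number, height: number): number {
  return (width * height * 3) / 2;
}

/** Returns views (no copy) onto the three planes of a packed YUV420P buffer. */
function splitYuv420p(buf: Uint8Array, width: number, height: number): Yuv420pPlanes {
  const ySize = width * height;
  const chromaSize = ySize / 4; // assumes even width and height
  if (buf.length < ySize + 2 * chromaSize) {
    throw new RangeError("buffer is smaller than one YUV420P frame");
  }
  return {
    y: buf.subarray(0, ySize),
    u: buf.subarray(ySize, ySize + chromaSize),
    v: buf.subarray(ySize + chromaSize, ySize + 2 * chromaSize),
  };
}
```

For the 1920 x 1080 maximum used by the decoder, yuv420pSize gives 3,110,400 bytes, which matches the size of yuv_buffer.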

Build command

    emcc web_decoder.cpp ./lib/libavformat.a \
        ./lib/libavcodec.a \
        ./lib/libswresample.a \
        ./lib/libswscale.a \
        ./lib/libavutil.a \
        -I "./include" \
        -s ALLOW_MEMORY_GROWTH=1 \
        -s ENVIRONMENT=web \
        -s MODULARIZE=1 \
        -s EXPORT_ES6=1 \
        -s USE_ES6_IMPORT_META=0 \
        -s EXPORT_NAME='loadWebDecoder' \
        --no-entry \
        -o ./web_decoder.js

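Unlike the test build above, here we write our own page to load the module, so only the wasm and js files need to be generated.

A brief explanation of the flags:

-s ENVIRONMENT=web: the output is only used on the web, so support code for non-web environments is removed

-s MODULARIZE=1: emits a factory function that returns a Promise

-s EXPORT_ES6=1: emits the JS file as an ES6 module

-s USE_ES6_IMPORT_META=0: disables the use of import.meta.url

-s EXPORT_NAME: sets the name of the exported module factory; the default is Module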

Frontend

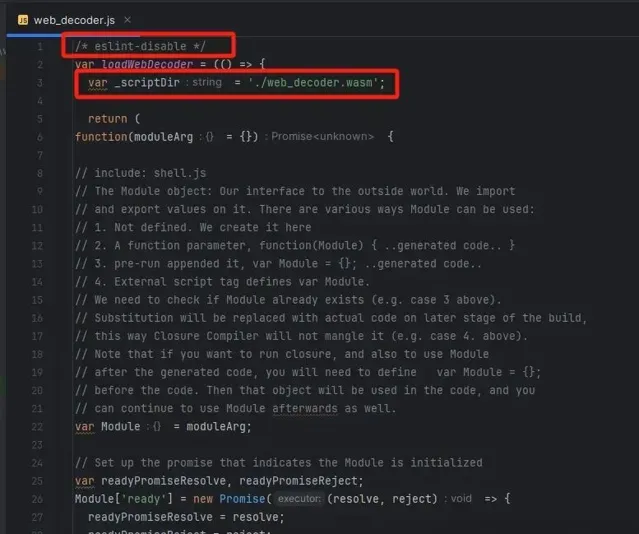

Loading the WASM

Put web_decoder.wasm in the public directory and edit web_decoder.js.

Add this as the first line:

/* eslint-disable */

Then change:

_scriptDir = './web_decoder.wasm'
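As an alternative to editing the generated file by hand, Emscripten's Module object accepts a locateFile(path, prefix) callback that decides where the .wasm file is fetched from, and a MODULARIZE factory takes that object as its argument. The sketch below wraps this in a small helper. loadDecoder, ModuleOptions and DecoderFactory are hypothetical names introduced for illustration, and it is an assumption that the generated loadWebDecoder factory is called this way in your build.

```typescript
// Minimal shape of the options a MODULARIZE factory accepts; only locateFile is used here.
interface ModuleOptions {
  locateFile?: (path: string, prefix: string) => string;
}

// Hypothetical type for the generated factory (e.g. loadWebDecoder).
type DecoderFactory<M> = (options?: ModuleOptions) => Promise<M>;

/**
 * Calls the factory so that .wasm files are fetched from baseUrl,
 * while every other file keeps Emscripten's default location.
 */
function loadDecoder<M>(factory: DecoderFactory<M>, baseUrl: string): Promise<M> {
  const base = baseUrl.endsWith("/") ? baseUrl : baseUrl + "/";
  return factory({
    locateFile: (path, prefix) => (path.endsWith(".wasm") ? base + path : prefix + path),
  });
}
```

With the file served from the public directory, a call such as loadDecoder(loadWebDecoder, "/") would request /web_decoder.wasm. The generated JS then stays untouched, so the edit does not have to be repeated after every rebuild.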

Using it in React

The project was created with create-react-app. Below is the code of App.tsx:

    import React, {useEffect, useRef, useState} from 'react';
    import './App.css';
    import {useWebsocket} from "./hooks/useWebsocket";
    import {useWasm} from "./hooks/useWasm";
    import loadWebDecoder from "./lib/web_decoder";
    import WebglScreen from "./lib/webgl_screen";

    export const WS_SUBSCRIBE_STREAM_DATA = '/user/topic/stream-data/real-time';
    export const WS_SEND_RTSP_URL = '/app/rtsp-url';
    export const STOMP_URL = '/stomp/endpoint';
    // export const WASM_URL = '/wasm/ffmpeg.wasm';

    const App = () => {
        const [rtspUrl, setRtspUrl] = useState<string>('rtsp://192.168.10.174:8554/rtsp/live1');
        const {connected, connect, subscribe, send} = useWebsocket({url: STOMP_URL});
        // const {loading, error, instance} = useWasm({url: WASM_URL});
        const [module, setModule] = useState<any>();
        const canvasRef = useRef<any>();
        // Refs keep the decoder handle and the renderer stable across re-renders.
        const ptrRef = useRef<any>();
        const webglScreenRef = useRef<any>();

        useEffect(() => {
            if (!connected) {
                connect();
            }
            loadWebDecoder().then((mod: any) => {
                setModule(mod);
            })
        }, []);

        const onRtspUrlChange = (event: any) => {
            setRtspUrl(event.target.value);
        }

        const onOpen = () => {
            // Wait until both the WebSocket and the wasm module are ready.
            if (connected && module) {
                send(WS_SEND_RTSP_URL, {}, rtspUrl);
                subscribe(WS_SUBSCRIBE_STREAM_DATA, onReceiveData, {});
                ptrRef.current = module._initDecoder();
                const canvas = canvasRef.current;
                canvas!.width = 960;
                canvas!.height = 480;
                webglScreenRef.current = new WebglScreen(canvas);
            }
        }

        const onReceiveData = (message: any) => {
            const ptr = ptrRef.current;
            const data = JSON.parse(message.body);
            const buffer = new Uint8Array(data);
            const length = buffer.length;
            console.log("receive pkt length :", length);
            // Ask the C/C++ side for a block of memory to receive the H.264 data from JS.
            const dst = module._GetBuffer(ptr, length);
            // Copy the data from the JS side to the C/C++ side.
            module.HEAPU8.set(buffer, dst);
            if (module._Decode(ptr, length) >= 0) {
                const width = module._GetWidth(ptr);
                const height = module._GetHeight(ptr);
                const size = width * height * 3 / 2;
                console.log("decode success, width:%d height:%d", width, height);
                // Pointer to the YUV data produced by the C/C++ side (0 on failure).
                const yuvData = module._GetRenderData(ptr);
                if (!yuvData) {
                    console.log("render data unavailable");
                    return;
                }
                // Copy the data from the C/C++ side to the JS side.
                const renderBuffer = new Uint8Array(module.HEAPU8.subarray(yuvData, yuvData + size));
                webglScreenRef.current.renderImg(width, height, renderBuffer)
            } else {
                console.log("decode fail");
            }
        }

        return (
            <div className="root">
                <div className="header">
                    <h1>rtsp wasm player</h1>
                    <div className="form">
                        <label>rtspUrl:</label>
                        <input className="form-input" onChange={onRtspUrlChange} defaultValue={rtspUrl}/>
                        <button className="form-btn" onClick={onOpen}>Open</button>
                    </div>
                </div>
                <div className="context">
                    <canvas ref={canvasRef}/>
                </div>
            </div>
        );
    }

    export default App;
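The data flow in onReceiveData (copy the packet into wasm memory, decode, copy the frame back out) can be isolated from React into one function, which makes it easier to test. The sketch below is one way to do that under stated assumptions: DecoderModule lists only the exports used above, and decodePacket and DecodedFrame are hypothetical names that are not part of the original project.

```typescript
// The subset of the generated module that the decode step relies on.
interface DecoderModule {
  HEAPU8: Uint8Array;
  _GetBuffer(handle: number, len: number): number;
  _Decode(handle: number, len: number): number;
  _GetWidth(handle: number): number;
  _GetHeight(handle: number): number;
  _GetRenderData(handle: number): number;
}

interface DecodedFrame {
  width: number;
  height: number;
  data: Uint8Array; // packed YUV420P, owned by JS
}

/** Feeds one packet to the decoder; returns null when no frame is available yet. */
function decodePacket(mod: DecoderModule, handle: number, packet: Uint8Array): DecodedFrame | null {
  const dst = mod._GetBuffer(handle, packet.length);
  // Read mod.HEAPU8 on every use: with ALLOW_MEMORY_GROWTH the view can be
  // replaced whenever the wasm memory grows.
  mod.HEAPU8.set(packet, dst);
  if (mod._Decode(handle, packet.length) < 0) {
    return null;
  }
  const width = mod._GetWidth(handle);
  const height = mod._GetHeight(handle);
  const src = mod._GetRenderData(handle);
  if (src === 0) {
    return null;
  }
  const size = (width * height * 3) / 2;
  // slice() copies, so the frame stays valid after the next decode overwrites the wasm buffer.
  return { width, height, data: mod.HEAPU8.slice(src, src + size) };
}
```

The copy matters because the decoder reuses the same output buffer for every frame, so a view into wasm memory would change underneath the renderer.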

Backend

Pulling the stream

Stream pulling is done with javacv. The code below pulls the stream and forwards the raw packets over WebSocket:

    package org.timothy.backend.service.runnable;

    import lombok.NoArgsConstructor;
    import lombok.extern.slf4j.Slf4j;
    import org.bytedeco.ffmpeg.avcodec.AVPacket;
    import org.bytedeco.ffmpeg.global.avcodec;
    import org.bytedeco.ffmpeg.global.avutil;
    import org.bytedeco.javacpp.BytePointer;
    import org.bytedeco.javacv.FFmpegFrameGrabber;
    import org.bytedeco.javacv.FFmpegLogCallback;
    import org.bytedeco.javacv.FrameGrabber.Exception;
    import org.springframework.messaging.simp.SimpMessagingTemplate;

    import java.util.Arrays;

    @NoArgsConstructor
    @Slf4j
    public class GrabTask implements Runnable {

        private String rtspUrl;
        private String sessionId;
        private SimpMessagingTemplate simpMessagingTemplate;

        public GrabTask(String sessionId, String rtspUrl, SimpMessagingTemplate simpMessagingTemplate) {
            this.rtspUrl = rtspUrl;
            this.sessionId = sessionId;
            this.simpMessagingTemplate = simpMessagingTemplate;
        }

        boolean running = false;

        @Override
        public void run() {
            log.info("start grab task sessionId:{}, streamUrl:{}", sessionId, rtspUrl);
            FFmpegFrameGrabber grabber = null;
            FFmpegLogCallback.set();
            avutil.av_log_set_level(avutil.AV_LOG_INFO);
            try {
                grabber = new FFmpegFrameGrabber(rtspUrl);
                grabber.setOption("rtsp_transport", "tcp");
                grabber.start();
                running = true;
                AVPacket pkt;
                while (running) {
                    pkt = grabber.grabPacket();
                    // Skip empty packets.
                    if (pkt == null || pkt.size() == 0 || pkt.data() == null) {
                        continue;
                    }
                    // Copy the encoded packet bytes out of native memory.
                    byte[] buffer = new byte[pkt.size()];
                    BytePointer data = pkt.data();
                    data.get(buffer);
                    // log.info(Arrays.toString(buffer));
                    // The packet is sent as text, e.g. "[0, 0, 0, 1, 103, ...]".
                    simpMessagingTemplate.convertAndSendToUser(sessionId, "/topic/stream-data/real-time", Arrays.toString(buffer));
                    avcodec.av_packet_unref(pkt);
                }
            } catch (Exception e) {
                running = false;
                log.info(e.getMessage());
            } finally {
                try {
                    if (grabber != null) {
                        grabber.close();
                        grabber.release();
                    }
                } catch (Exception e) {
                    log.info(e.getMessage());
                }
            }
        }
    }
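Because each packet is serialised with Arrays.toString, the frontend receives text such as "[0, 0, 0, 1, 103, -1]". Java bytes are signed, so values above 127 arrive as negative numbers. The text happens to be valid JSON, and converting the parsed array to a Uint8Array wraps the negative values back to 0..255. The sketch below shows that conversion on its own; parsePacketBody is a hypothetical helper name.

```typescript
/**
 * Converts the text produced by Java's Arrays.toString(byte[]) into raw bytes.
 * Negative (signed) values wrap modulo 256, so -1 becomes 255 and -128 becomes 128.
 */
function parsePacketBody(body: string): Uint8Array {
  const values: unknown = JSON.parse(body);
  if (!Array.isArray(values)) {
    throw new TypeError("expected a JSON array of byte values");
  }
  return Uint8Array.from(values as number[]);
}

// Example: an H.264 start code followed by three payload bytes.
const packet = parsePacketBody("[0, 0, 0, 1, 103, -1, -128]");
console.log(Array.from(packet)); // [0, 0, 0, 1, 103, 255, 128]
```

Note that this text form uses several characters per byte, so the messages are a few times larger than the packets themselves. Sending binary WebSocket frames would avoid that, at the cost of changing both ends.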

References

https://zhuanlan.zhihu.com/p/399412573

https://blog.csdn.net/w55100/article/details/127541744

https://juejin.cn/post/7041485336350261278

https://juejin.cn/post/6844904008054751246