Introduction

This is a lightweight face detection model designed for edge computing devices.

Model size: the default FP32 weights (.pth) are 1.04 to 1.1 MB, and about 300 KB after int8 quantization in an inference framework. Compute: at an input resolution of 320x240 the model needs about 90 to 109 MFLOPs.
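The ratio between the two sizes is roughly what you would expect when 4-byte FP32 weights become 1-byte int8 weights. A minimal sketch of that arithmetic, assuming the .pth file is almost entirely raw weights and reading MB/KB as decimal units (both are simplifications, and `ModelSizeEstimate` is an illustrative name, not part of the project):

```csharp
// Back-of-the-envelope model size arithmetic (illustrative helper).
// Assumptions: the checkpoint is almost entirely raw weights, every FP32
// weight takes 4 bytes and every int8 weight takes 1 byte.
public static class ModelSizeEstimate
{
    const long BytesPerFp32 = 4;
    const long BytesPerInt8 = 1;

    // Approximate parameter count stored in an FP32 file of the given size.
    public static long ParamCount(long fp32FileBytes) => fp32FileBytes / BytesPerFp32;

    // Approximate size after int8 quantization, ignoring scales and file metadata.
    public static long Int8Bytes(long fp32FileBytes) => ParamCount(fp32FileBytes) * BytesPerInt8;
}
```

For 1.04 MB to 1.1 MB this gives about 260 K to 275 K parameters and roughly 260 KB to 275 KB at int8. The remaining gap to "about 300 KB" plausibly comes from quantization parameters and file metadata, which this estimate ignores.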

The model comes in two versions: version-slim (simplified backbone, slightly faster) and version-RFB (with a modified RFB module, higher accuracy).

Models pre-trained on WIDER FACE are provided for two input resolutions, 320x240 and 640x480, to suit different application scenarios.
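Since the detector is made of convolution layers, its cost should grow roughly with the number of input pixels. A rough sketch of that scaling, assuming the 640x480 model uses the same layers as the 320x240 one, that compute is proportional to input area, and that post-processing is negligible (`FlopsEstimate` is a hypothetical helper and its output is an estimate, not a measured figure):

```csharp
// Rough compute scaling for a convolutional detector (illustrative helper).
// Assumption: FLOPs are proportional to the number of input pixels, which
// approximately holds for convolution layers when only the input resolution
// changes. Post-processing (prior-box decoding, NMS) is ignored.
public static class FlopsEstimate
{
    public static double Scale(double baseMflops, int baseW, int baseH, int newW, int newH)
    {
        double areaRatio = (double)newW * newH / ((double)baseW * baseH);
        return baseMflops * areaRatio;
    }
}
```

640x480 has four times the pixels of 320x240, so under this assumption the 90 to 109 MFLOPs quoted above would become roughly 360 to 436 MFLOPs.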

The models can be exported to ONNX for easy porting and inference.

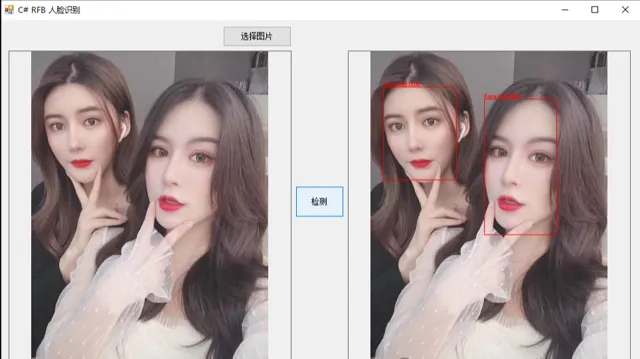

Results

Model information

Inputs

-------------------------

name:input

tensor:Float[1, 3, 240, 320]

---------------------------------------------------------------
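The input is a single RGB image in NCHW layout (batch, channel, height, width). The sketch below shows the layout change from an interleaved HWC byte buffer to planar floats, using the same (v - 127.5) / 127.5 normalization as the detect code later in this post. `Preprocess.ToNchw` is a hypothetical name, and the buffer is assumed to be already resized to 320x240 and converted to RGB:

```csharp
using System;

// Converts an interleaved 8-bit RGB image (row-major, H x W x 3) into the
// planar float layout [1, 3, H, W] used by the "input" tensor, with each
// value mapped from [0, 255] to [-1, 1].
public static class Preprocess
{
    public static float[] ToNchw(byte[] rgb, int width, int height)
    {
        if (rgb.Length != width * height * 3)
            throw new ArgumentException("Buffer size does not match width * height * 3.");

        int plane = width * height;            // elements per channel plane
        var chw = new float[3 * plane];
        for (int y = 0; y < height; y++)
        {
            for (int x = 0; x < width; x++)
            {
                int pixel = y * width + x;     // position inside one plane
                for (int c = 0; c < 3; c++)
                {
                    byte v = rgb[pixel * 3 + c];
                    chw[c * plane + pixel] = (v - 127.5f) / 127.5f;
                }
            }
        }
        return chw;
    }
}
```

With the ONNX Runtime C# API, the resulting array can be wrapped as `new DenseTensor<float>(chw, new[] { 1, 3, height, width })`, which avoids calling the multi-index tensor indexer once per element.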

Outputs

-------------------------

name:batchno_classid_score_x1y1x2y2

tensor:Float[-1, 7]

---------------------------------------------------------------
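The output holds one row of seven floats per detected face, and the -1 first dimension means the number of rows varies from image to image. A minimal pure-C# sketch of decoding that flat buffer, mirroring the loop in `detect` below. The `Detection` type and `Postprocess.Parse` are hypothetical names, and the box corners are assumed to be normalized to [0, 1], as the original code's multiplication by the frame size implies:

```csharp
using System;
using System.Collections.Generic;

// One detection in pixel coordinates (hypothetical type, similar to BoxInfo).
public struct Detection
{
    public int X1, Y1, X2, Y2;
    public float Score;
    public int ClassId;
}

public static class Postprocess
{
    const int RowLength = 7; // batchno, classid, score, x1, y1, x2, y2

    // Parses the flat [N, 7] output, keeps rows with score >= threshold and
    // scales the normalized corners to the original frame size.
    public static List<Detection> Parse(float[] output, float threshold, int frameWidth, int frameHeight)
    {
        if (output.Length % RowLength != 0)
            throw new ArgumentException("Output length is not a multiple of 7.");

        var result = new List<Detection>();
        for (int n = 0; n < output.Length / RowLength; n++)
        {
            int o = n * RowLength;
            float score = output[o + 2];
            if (score < threshold)
                continue;

            result.Add(new Detection
            {
                ClassId = (int)output[o + 1],
                Score = score,
                X1 = (int)(output[o + 3] * frameWidth),
                Y1 = (int)(output[o + 4] * frameHeight),
                X2 = (int)(output[o + 5] * frameWidth),
                Y2 = (int)(output[o + 6] * frameHeight),
            });
        }
        return result;
    }
}
```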

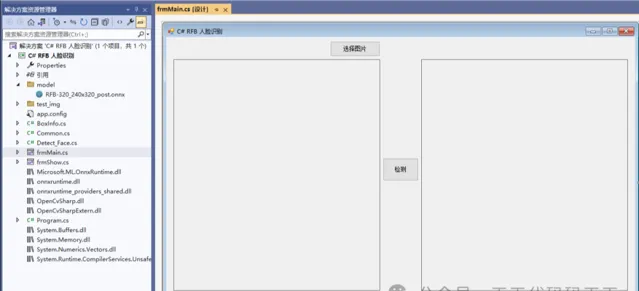

Project

VS2022

.NET Framework 4.8

OpenCvSharp 4.8

Microsoft.ML.OnnxRuntime 1.16.2

Code

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using Microsoft.ML.OnnxRuntime;
using Microsoft.ML.OnnxRuntime.Tensors;
using OpenCvSharp;

namespace Onnx_Demo
{
    // BoxInfo is not shown in the original listing. This minimal definition
    // matches how it is used below; drop it if your project already defines one.
    public class BoxInfo
    {
        public int x1, y1, x2, y2;
        public float score;
        public int label;

        public BoxInfo(int x1, int y1, int x2, int y2, float score, int label)
        {
            this.x1 = x1; this.y1 = y1; this.x2 = x2; this.y2 = y2;
            this.score = score;
            this.label = label;
        }
    }

    public class Detect_Face : IDisposable
    {
        float confThreshold;
        SessionOptions options;
        InferenceSession onnx_session;
        Tensor<float> input_tensor;

        int inpWidth = 320;
        int inpHeight = 240;

        public Detect_Face(float confThreshold)
        {
            this.confThreshold = confThreshold;
            string model_path = "model/RFB-320_240x320_post.onnx";

            // Input tensor, NCHW: [1, 3, 240, 320]
            input_tensor = new DenseTensor<float>(new[] { 1, 3, inpHeight, inpWidth });

            // Session options: verbose logging, run on the CPU
            options = new SessionOptions();
            options.LogSeverityLevel = OrtLoggingLevel.ORT_LOGGING_LEVEL_INFO;
            options.AppendExecutionProvider_CPU(0);

            // Create the inference session from the local model file
            onnx_session = new InferenceSession(model_path, options);
        }

        public List<BoxInfo> detect(Mat frame)
        {
            // BGR -> RGB, resize to the network input, normalize to [-1, 1]
            using (Mat dstimg = new Mat())
            {
                Cv2.CvtColor(frame, dstimg, ColorConversionCodes.BGR2RGB);
                Cv2.Resize(dstimg, dstimg, new Size(inpWidth, inpHeight));

                for (int y = 0; y < dstimg.Height; y++)
                {
                    for (int x = 0; x < dstimg.Width; x++)
                    {
                        Vec3b px = dstimg.At<Vec3b>(y, x);
                        input_tensor[0, 0, y, x] = (px[0] - 127.5f) / 127.5f;
                        input_tensor[0, 1, y, x] = (px[1] - 127.5f) / 127.5f;
                        input_tensor[0, 2, y, x] = (px[2] - 127.5f) / 127.5f;
                    }
                }
            }

            // Build a new input list per call: adding to a shared list on every
            // call would pass duplicate "input" entries from the second frame on.
            var input_container = new List<NamedOnnxValue>
            {
                NamedOnnxValue.CreateFromTensor("input", input_tensor)
            };

            List<BoxInfo> bboxes = new List<BoxInfo>();

            // The results own native memory, so dispose them after copying the data out
            using (var result_infer = onnx_session.Run(input_container))
            {
                // First output: [num_proposal, 7] = batchno, classid, score, x1, y1, x2, y2
                Tensor<float> result_tensor = result_infer.First().AsTensor<float>();
                int num_proposal = result_tensor.Dimensions[0];
                float[] result_array = result_tensor.ToArray();

                // One row of 7 values per detected face
                for (int n = 0; n < num_proposal; n++)
                {
                    float class_score = result_array[n * 7 + 2];
                    if (class_score < confThreshold)
                        continue;

                    // Box corners are normalized; scale them to the original frame size
                    int xmin = (int)(result_array[n * 7 + 3] * frame.Cols);
                    int ymin = (int)(result_array[n * 7 + 4] * frame.Rows);
                    int xmax = (int)(result_array[n * 7 + 5] * frame.Cols);
                    int ymax = (int)(result_array[n * 7 + 6] * frame.Rows);

                    bboxes.Add(new BoxInfo(xmin, ymin, xmax, ymax, class_score, (int)result_array[n * 7 + 1]));
                }
            }

            return bboxes;
        }

        public void drawPred(Mat frame, List<BoxInfo> bboxes)
        {
            foreach (BoxInfo box in bboxes)
            {
                Cv2.Rectangle(frame, new Point(box.x1, box.y1), new Point(box.x2, box.y2), new Scalar(0, 0, 255), 2);
                string label = $"Face:{box.score:0.00}";
                Cv2.PutText(frame, label, new Point(box.x1, box.y1 - 5), HersheyFonts.HersheySimplex, 0.75, new Scalar(0, 255, 0), 1);
            }
        }

        // Release the native session resources
        public void Dispose()
        {
            onnx_session.Dispose();
            options.Dispose();
        }
    }
}
```
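`drawPred` passes the raw corners straight to OpenCV and draws the label at `y1 - 5`, which ends up outside the image when a face touches the top edge. A hypothetical helper that clamps a box to valid pixel indices and moves the label just inside the box in that case; the class name, the default 5-pixel margin and the text-height parameter are assumptions, not part of the original project:

```csharp
using System;

// Hypothetical drawing helpers (not part of the original project).
public static class BoxClamp
{
    // Clamp both corners to valid pixel indices of a width x height frame.
    public static (int x1, int y1, int x2, int y2) Clamp(int x1, int y1, int x2, int y2, int width, int height)
    {
        int ClampTo(int v, int max) => Math.Max(0, Math.Min(v, max));
        return (ClampTo(x1, width - 1), ClampTo(y1, height - 1),
                ClampTo(x2, width - 1), ClampTo(y2, height - 1));
    }

    // PutText's origin is the bottom-left corner of the text. Draw above the box
    // when there is room, otherwise just inside its top edge so it stays visible.
    public static int LabelBaselineY(int boxTop, int textHeight, int margin = 5)
    {
        int above = boxTop - margin;
        return above >= textHeight ? above : boxTop + textHeight + margin;
    }
}
```

In `drawPred`, the label origin would then become `new Point(box.x1, BoxClamp.LabelBaselineY(box.y1, textHeight))`, where `textHeight` can be measured with `Cv2.GetTextSize` instead of being hard-coded.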