Project Overview

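Video-LLaVA, released by researchers at Peking University, lets a single large language model handle both images and videos, advancing multimodal learning. It binds image and video features into one unified visual feature space before projecting them into the language model. This makes visual information easier for the LLM to understand and process, and the model performs particularly well on applications such as video question answering. Compared with conventional models, Video-LLaVA gains both performance and efficiency from joint training on images and videos.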


Features

Video-LLaVA exhibits remarkable interaction between images and videos, even though its training data contains no image-video pairs.

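💡 Simple baseline: learning a unified visual representation through alignment before projection. By binding the unified visual representation to the language feature space, we enable a large language model (LLM) to perform visual reasoning on both images and videos.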

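🔥 High performance through complementary learning on videos and images. Extensive experiments show that the two modalities complement each other, giving significant advantages over models designed specifically for either images or videos.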
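To make "alignment before projection" concrete, here is a toy sketch in plain Python with no real model involved. It assumes, as described above, that the image and video encoders already emit features in one shared space, so a single projection can map both modalities into the LLM's embedding space. The dimensions, the random weights, and the helper names `encode_image`, `encode_video` and `project` are all hypothetical stand-ins, not Video-LLaVA's actual code.

```python
# Toy illustration of "alignment before projection" (NOT Video-LLaVA's code).
import random

D_VISUAL = 4  # hypothetical width of the unified visual feature space
D_LLM = 3     # hypothetical width of the LLM embedding space

random.seed(0)
# One shared weight matrix (D_LLM x D_VISUAL). The real model learns its
# projection; here the weights are simply random.
W = [[random.uniform(-1, 1) for _ in range(D_VISUAL)] for _ in range(D_LLM)]


def project(token):
    """Map one visual token (length D_VISUAL) into the LLM space (length D_LLM)."""
    return [sum(w * x for w, x in zip(row, token)) for row in W]


def encode_image(num_patches=2):
    """Stand-in image encoder: num_patches tokens in the shared visual space."""
    return [[random.random() for _ in range(D_VISUAL)] for _ in range(num_patches)]


def encode_video(num_frames=3, num_patches=2):
    """Stand-in video encoder: one group of patch tokens per frame, same space."""
    return [encode_image(num_patches) for _ in range(num_frames)]


# The SAME projector handles both modalities because their features are pre-aligned.
image_tokens = [project(t) for t in encode_image()]
video_tokens = [project(t) for frame in encode_video() for t in frame]

print(len(image_tokens), len(video_tokens))  # 2 image tokens, 6 video tokens
print(all(len(t) == D_LLM for t in image_tokens + video_tokens))
```

Because both modalities already share one space when they reach the projector, a single projection serves images and video frames alike. That is the design choice the features above describe, reduced to a few lines.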

Demo

Gradio Web UI

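An online demo is also available on Hugging Face Spaces. To run the web demo locally, we strongly recommend the following command; the demo integrates all the features Video-LLaVA currently supports.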

python -m videollava.serve.gradio_web_server

CLI Inference

CUDA_VISIBLE_DEVICES=0 python -m videollava.serve.cli --model-path "LanguageBind/Video-LLaVA-7B" --file "path/to/your/video.mp4" --load-4bit

CUDA_VISIBLE_DEVICES=0 python -m videollava.serve.cli --model-path "LanguageBind/Video-LLaVA-7B" --file "path/to/your/image.jpg" --load-4bit
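If you need the CLI invocation for several files, a small helper can assemble the same command programmatically. This is a hypothetical sketch: `build_cli_command` is an illustrative name, not part of the repository, and it reuses only the module path and flags shown above (`--model-path`, `--file`, `--load-4bit`). It prints the commands instead of launching them, because the CLI may wait for interactive input.

```python
# Hypothetical helper that rebuilds the CLI commands shown above.
import os
import shlex

MODEL_PATH = "LanguageBind/Video-LLaVA-7B"


def build_cli_command(media_path, gpu="0", load_4bit=True):
    """Return (env, argv) for `python -m videollava.serve.cli` on one file."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=gpu)  # pin the run to one GPU
    argv = [
        "python", "-m", "videollava.serve.cli",
        "--model-path", MODEL_PATH,
        "--file", media_path,  # a video (.mp4) or an image (.jpg)
    ]
    if load_4bit:
        argv.append("--load-4bit")  # same 4-bit loading flag as in the commands above
    return env, argv


if __name__ == "__main__":
    for path in ["path/to/your/video.mp4", "path/to/your/image.jpg"]:
        env, argv = build_cli_command(path)
        # Print only; pass argv and env=env to subprocess.run() to actually launch it.
        print("CUDA_VISIBLE_DEVICES=" + env["CUDA_VISIBLE_DEVICES"], shlex.join(argv))
```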

Main Results

·

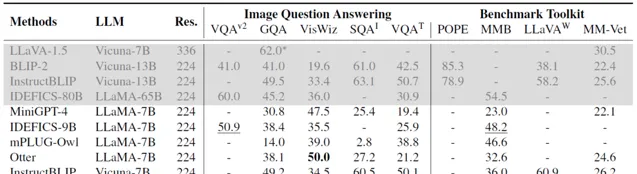

影像理解

· 視訊理解

Installation and Requirements

· Python >= 3.10

· PyTorch == 2.0.1

· CUDA Version >= 11.7

· Install the required packages:

git clone https://github.com/PKU-YuanGroup/Video-LLaVA
cd Video-LLaVA
conda create -n videollava python=3.10 -y
conda activate videollava
pip install --upgrade pip  # enable PEP 660 support
pip install -e .
pip install -e ".[train]"
pip install flash-attn --no-build-isolation
pip install decord opencv-python git+https://github.com/facebookresearch/pytorchvideo.git@28fe037d212663c6a24f373b94cc5d478c8c1a1d

API

To load the model locally, you can use the following code.

Image inference

import torch
from videollava.constants import IMAGE_TOKEN_INDEX, DEFAULT_IMAGE_TOKEN
from videollava.conversation import conv_templates, SeparatorStyle
from videollava.model.builder import load_pretrained_model
from videollava.utils import disable_torch_init
from videollava.mm_utils import tokenizer_image_token, get_model_name_from_path, KeywordsStoppingCriteria


def main():
    disable_torch_init()  # skip default weight initialization to speed up model creation
    image = 'videollava/serve/examples/extreme_ironing.jpg'
    inp = 'What is unusual about this image?'
    model_path = 'LanguageBind/Video-LLaVA-7B'
    cache_dir = 'cache_dir'
    device = 'cuda'
    load_4bit, load_8bit = True, False  # load the weights in 4-bit to save GPU memory
    model_name = get_model_name_from_path(model_path)
    tokenizer, model, processor, _ = load_pretrained_model(model_path, None, model_name, load_8bit, load_4bit, device=device, cache_dir=cache_dir)
    image_processor = processor['image']
    conv_mode = "llava_v1"
    conv = conv_templates[conv_mode].copy()
    roles = conv.roles

    # Turn the image into pixel tensors on the model's device, in fp16
    image_tensor = image_processor.preprocess(image, return_tensors='pt')['pixel_values']
    if type(image_tensor) is list:
        tensor = [img.to(model.device, dtype=torch.float16) for img in image_tensor]
    else:
        tensor = image_tensor.to(model.device, dtype=torch.float16)

    print(f"{roles[0]}: {inp}")  # echo the user's question
    # Put the <image> placeholder in front of the question, then build the llava_v1 prompt
    inp = DEFAULT_IMAGE_TOKEN + '\n' + inp
    conv.append_message(conv.roles[0], inp)
    conv.append_message(conv.roles[1], None)
    prompt = conv.get_prompt()
    input_ids = tokenizer_image_token(prompt, tokenizer, IMAGE_TOKEN_INDEX, return_tensors='pt').unsqueeze(0).cuda()
    # Stop generation at the template's end-of-turn separator
    stop_str = conv.sep if conv.sep_style != SeparatorStyle.TWO else conv.sep2
    keywords = [stop_str]
    stopping_criteria = KeywordsStoppingCriteria(keywords, tokenizer, input_ids)

    with torch.inference_mode():
        output_ids = model.generate(
            input_ids,
            images=tensor,
            do_sample=True,
            temperature=0.2,
            max_new_tokens=1024,
            use_cache=True,
            stopping_criteria=[stopping_criteria])

    # Drop the prompt tokens and decode only the generated answer
    outputs = tokenizer.decode(output_ids[0, input_ids.shape[1]:]).strip()
    print(outputs)


if __name__ == '__main__':
    main()
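The image example inserts `DEFAULT_IMAGE_TOKEN` into the text and then calls `tokenizer_image_token`. The sketch below is a simplified re-implementation of that idea, not the library function. It assumes `IMAGE_TOKEN_INDEX = -200` (LLaVA's convention; check `videollava/constants.py`) and uses a hypothetical whitespace tokenizer, `toy_tokenize`, in place of the real LLaMA tokenizer. The text between placeholders is tokenized, and a special id marks where each placeholder was, so the model can later substitute projected visual features at that position. The real function also handles the BOS token and can return tensors.

```python
# Simplified sketch of how "<image>" placeholders become a special token id.
DEFAULT_IMAGE_TOKEN = "<image>"
IMAGE_TOKEN_INDEX = -200  # assumed value, following LLaVA's convention


def toy_tokenize(text, vocab):
    """Hypothetical tokenizer: give each new whitespace-separated word the next free id."""
    return [vocab.setdefault(word, len(vocab)) for word in text.split()]


def interleave_image_tokens(prompt, vocab):
    """Tokenize the text around each placeholder and put IMAGE_TOKEN_INDEX in its place."""
    chunks = [toy_tokenize(chunk, vocab) for chunk in prompt.split(DEFAULT_IMAGE_TOKEN)]
    ids = []
    for i, chunk in enumerate(chunks):
        if i > 0:
            ids.append(IMAGE_TOKEN_INDEX)  # one marker between consecutive text chunks
        ids.extend(chunk)
    return ids


vocab = {}
prompt = "USER: " + DEFAULT_IMAGE_TOKEN + "\nWhat is unusual about this image? ASSISTANT:"
ids = interleave_image_tokens(prompt, vocab)
print(ids)  # [0, -200, 1, 2, 3, 4, 5, 6, 7]
```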

Video inference

import torch
from videollava.constants import IMAGE_TOKEN_INDEX, DEFAULT_IMAGE_TOKEN
from videollava.conversation import conv_templates, SeparatorStyle
from videollava.model.builder import load_pretrained_model
from videollava.utils import disable_torch_init
from videollava.mm_utils import tokenizer_image_token, get_model_name_from_path, KeywordsStoppingCriteria


def main():
    disable_torch_init()  # skip default weight initialization to speed up model creation
    video = 'videollava/serve/examples/sample_demo_1.mp4'
    inp = 'Why is this video funny?'
    model_path = 'LanguageBind/Video-LLaVA-7B'
    cache_dir = 'cache_dir'
    device = 'cuda'
    load_4bit, load_8bit = True, False  # load the weights in 4-bit to save GPU memory
    model_name = get_model_name_from_path(model_path)
    tokenizer, model, processor, _ = load_pretrained_model(model_path, None, model_name, load_8bit, load_4bit, device=device, cache_dir=cache_dir)
    video_processor = processor['video']
    conv_mode = "llava_v1"
    conv = conv_templates[conv_mode].copy()
    roles = conv.roles

    # Turn the video into frame tensors on the model's device, in fp16
    video_tensor = video_processor(video, return_tensors='pt')['pixel_values']
    if type(video_tensor) is list:
        tensor = [vid.to(model.device, dtype=torch.float16) for vid in video_tensor]
    else:
        tensor = video_tensor.to(model.device, dtype=torch.float16)

    print(f"{roles[0]}: {inp}")  # echo the user's question
    # One <image> placeholder per sampled frame, then the question
    inp = ' '.join([DEFAULT_IMAGE_TOKEN] * model.get_video_tower().config.num_frames) + '\n' + inp
    conv.append_message(conv.roles[0], inp)
    conv.append_message(conv.roles[1], None)
    prompt = conv.get_prompt()
    input_ids = tokenizer_image_token(prompt, tokenizer, IMAGE_TOKEN_INDEX, return_tensors='pt').unsqueeze(0).cuda()
    # Stop generation at the template's end-of-turn separator
    stop_str = conv.sep if conv.sep_style != SeparatorStyle.TWO else conv.sep2
    keywords = [stop_str]
    stopping_criteria = KeywordsStoppingCriteria(keywords, tokenizer, input_ids)

    with torch.inference_mode():
        output_ids = model.generate(
            input_ids,
            images=tensor,
            do_sample=True,
            temperature=0.1,
            max_new_tokens=1024,
            use_cache=True,
            stopping_criteria=[stopping_criteria])

    # Drop the prompt tokens and decode only the generated answer
    outputs = tokenizer.decode(output_ids[0, input_ids.shape[1]:]).strip()
    print(outputs)


if __name__ == '__main__':
    main()
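The main difference from the image example is the prompt: the video example repeats the placeholder once per frame before the question. The sketch below mimics what the two `conv.append_message` calls plus `conv.get_prompt()` produce. It makes several assumptions, all worth checking against `videollava/conversation.py`: `num_frames = 8`, and a `llava_v1` template with roles `("USER", "ASSISTANT")`, `sep = " "`, `sep2 = "</s>"` and `SeparatorStyle.TWO`, as in upstream LLaVA. The system prompt is left as a placeholder string, and `build_video_prompt` is a hypothetical name.

```python
# Sketch of the video prompt layout under the assumptions stated above.
DEFAULT_IMAGE_TOKEN = "<image>"
SYSTEM = "<llava_v1 system prompt>"  # placeholder; the real text is in videollava/conversation.py
ROLES = ("USER", "ASSISTANT")
SEP, SEP2 = " ", "</s>"  # assumed separators for SeparatorStyle.TWO


def build_video_prompt(question, num_frames=8):
    """Mimic two append_message calls (user turn, empty assistant turn) + get_prompt()."""
    user_msg = " ".join([DEFAULT_IMAGE_TOKEN] * num_frames) + "\n" + question
    messages = [(ROLES[0], user_msg), (ROLES[1], None)]
    seps = [SEP, SEP2]
    prompt = SYSTEM + seps[0]
    for i, (role, msg) in enumerate(messages):
        if msg:
            prompt += role + ": " + msg + seps[i % 2]
        else:
            prompt += role + ":"  # open assistant turn that the model completes
    return prompt


prompt = build_video_prompt("Why is this video funny?")
stop_str = SEP2  # with SeparatorStyle.TWO, generation stops at sep2
print(prompt.count(DEFAULT_IMAGE_TOKEN), prompt.endswith("ASSISTANT:"))
```

Each of the eight placeholders is later mapped to the special image token id, so the LLM receives one slot per frame in the same format it uses for a single image.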

Project link

https://github.com/PKU-YuanGroup/Video-LLaVA
