Introduction

GitHub repository:

https://github.com/xuebinqin/U-2-Net

The repository describes itself as the code for the paper "U^2-Net: Going Deeper with Nested U-Structure for Salient Object Detection," accepted by Pattern Recognition in 2020.

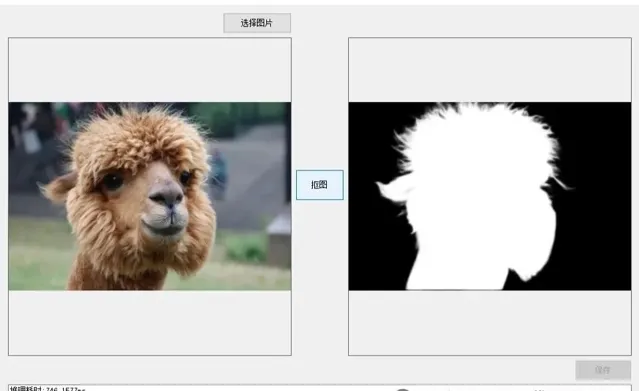

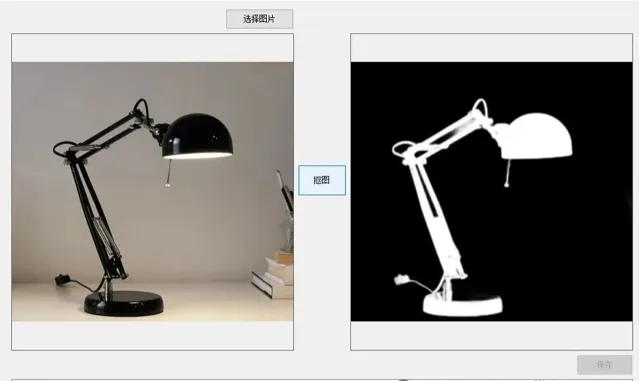

Results (u2net.onnx)

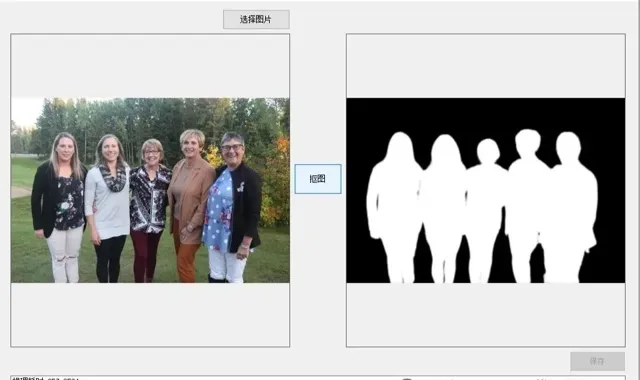

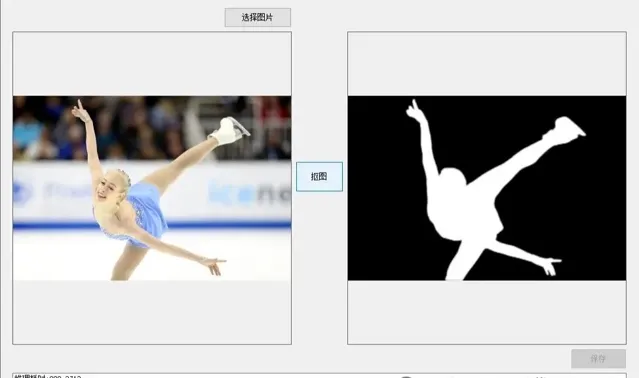

Results (u2net_human_seg.onnx)

Model information

u2net.onnx

Inputs

-------------------------

name:input_image

tensor:Float[1, 3, 320, 320]

---------------------------------------------------------------

Outputs

-------------------------

name:output_image

tensor:Float[1, 1, 320, 320]

name:2016

tensor:Float[1, 1, 320, 320]

name:2017

tensor:Float[1, 1, 320, 320]

name:2018

tensor:Float[1, 1, 320, 320]

name:2019

tensor:Float[1, 1, 320, 320]

name:2020

tensor:Float[1, 1, 320, 320]

name:2021

tensor:Float[1, 1, 320, 320]

---------------------------------------------------------------

u2net_human_seg.onnx

Inputs

-------------------------

name:input_image

tensor:Float[1, 3, 320, 320]

---------------------------------------------------------------

Outputs

-------------------------

name:output_image

tensor:Float[1, 1, 320, 320]

name:2016

tensor:Float[1, 1, 320, 320]

name:2017

tensor:Float[1, 1, 320, 320]

name:2018

tensor:Float[1, 1, 320, 320]

name:2019

tensor:Float[1, 1, 320, 320]

name:2020

tensor:Float[1, 1, 320, 320]

name:2021

tensor:Float[1, 1, 320, 320]

---------------------------------------------------------------
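A listing like the ones above can be reproduced from code. The following is a minimal sketch, assuming the Microsoft.ML.OnnxRuntime package is referenced; the class name `ModelInfo` and the default model path are hypothetical. ONNX Runtime reports the element type as a .NET type, so a float tensor prints as `Single` rather than `Float`; the listing above may have been produced by a different tool.

```csharp
using System;
using Microsoft.ML.OnnxRuntime;

class ModelInfo
{
    static void Main(string[] args)
    {
        // Hypothetical path; point it at your own copy of the model
        string modelPath = args.Length > 0 ? args[0] : @"model\u2net.onnx";

        using (var session = new InferenceSession(modelPath))
        {
            Console.WriteLine("Inputs");
            foreach (var input in session.InputMetadata)
            {
                Console.WriteLine("name:" + input.Key);
                Console.WriteLine("tensor:" + input.Value.ElementType.Name
                    + "[" + string.Join(", ", input.Value.Dimensions) + "]");
            }

            Console.WriteLine("Outputs");
            foreach (var output in session.OutputMetadata)
            {
                Console.WriteLine("name:" + output.Key);
                Console.WriteLine("tensor:" + output.Value.ElementType.Name
                    + "[" + string.Join(", ", output.Value.Dimensions) + "]");
            }
        }
    }
}
```

Checking the reported input name and shape this way is a quick guard against feeding a tensor whose name or size does not match the model.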

Project

Visual Studio 2022

.NET Framework 4.8

OpenCvSharp 4.8

Microsoft.ML.OnnxRuntime 1.16.2

Code

using Microsoft.ML.OnnxRuntime;

using Microsoft.ML.OnnxRuntime.Tensors;

using OpenCvSharp;

using System;

using System.Collections.Generic;

using System.Drawing;

using System.Drawing.Imaging;

using System.Linq;

using System.Threading.Tasks;

using System.Windows.Forms;

namespace U2Net

{

public partial class frmMain : Form

{

public frmMain()

{

InitializeComponent();

}

string fileFilter = "*.*|*.bmp;*.jpg;*.jpeg;*.tif;*.tiff;*.png";

string image_path = "";

string startupPath;

DateTime dt1 = DateTime.Now;

DateTime dt2 = DateTime.Now;

string model_path;

Mat image;

Mat result_image;

int modelSize = 512;

SessionOptions options;

InferenceSession onnx_session;

Tensor<float> input_tensor;

List<NamedOnnxValue> input_ontainer;

IDisposableReadOnlyCollection<DisposableNamedOnnxValue> result_infer;

DisposableNamedOnnxValue[] results_onnxvalue;

Tensor<float> result_tensors;

float[] result_array;

private void button1_Click(object sender, EventArgs e)

{

OpenFileDialog ofd = new OpenFileDialog();

ofd.Filter = fileFilter;

if (ofd.ShowDialog() != DialogResult.OK) return;

pictureBox1.Image = null;

image_path = ofd.FileName;

pictureBox1.Image = new Bitmap(image_path);

textBox1.Text = "";

image = new Mat(image_path);

pictureBox2.Image = null;

}

private void button2_Click(object sender, EventArgs e)

{

if (image_path == "")

{

return;

}

textBox1.Text = "";

pictureBox2.Image = null;

int oldwidth = image.Cols;

int oldheight = image.Rows;

//Scale the image so that its longer edge equals modelSize, keeping the aspect ratio

int maxEdge = Math.Max(image.Rows, image.Cols);

float ratio = 1.0f * modelSize / maxEdge;

int newHeight = (int)(image.Rows * ratio);

int newWidth = (int)(image.Cols * ratio);

Mat resize_image = image.Resize(new OpenCvSharp.Size(newWidth, newHeight));

int width = resize_image.Cols;

int height = resize_image.Rows;

if (width != modelSize || height != modelSize)

{

resize_image = resize_image.CopyMakeBorder(0, modelSize - newHeight, 0, modelSize - newWidth, BorderTypes.Constant, new Scalar(255, 255, 255));

}

Cv2.CvtColor(resize_image, resize_image, ColorConversionCodes.BGR2RGB);

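//Normalize each channel with the ImageNet mean and standard deviation and fill the tensor in NCHW order

for (int y = 0; y < resize_image.Height; y++)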

{

for (int x = 0; x < resize_image.Width; x++)

{

input_tensor[0, 0, y, x] = (resize_image.At<Vec3b>(y, x)[0] / 255f - 0.485f) / 0.229f;

input_tensor[0, 1, y, x] = (resize_image.At<Vec3b>(y, x)[1] / 255f - 0.456f) / 0.224f;

input_tensor[0, 2, y, x] = (resize_image.At<Vec3b>(y, x)[2] / 255f - 0.406f) / 0.225f;

}

}

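//Put input_tensor into the input container under the model's input name; clear the container first so that repeated runs do not accumulate duplicate inputs

input_ontainer.Clear();

input_ontainer.Add(NamedOnnxValue.CreateFromTensor("input_image", input_tensor));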

dt1 = DateTime.Now;

//Run inference and get the results

result_infer = onnx_session.Run(input_ontainer);

dt2 = DateTime.Now;

//Convert the results to a DisposableNamedOnnxValue array

results_onnxvalue = result_infer.ToArray();

//Read the first output node and convert it to tensor data

result_tensors = results_onnxvalue[0].AsTensor<float>();

result_array = result_tensors.ToArray();

//Invert black and white (disabled)

//for (int i = 0; i < result_array.Length; i++)

//{

// result_array[i] = 1 - result_array[i];

//}

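//Min-max normalize the output to the range [0, 255]

float maxVal = result_array.Max();

float minVal = result_array.Min();

//Avoid dividing by zero when the output is constant

float range = Math.Max(maxVal - minVal, 1e-6f);

for (int i = 0; i < result_array.Length; i++)

{

result_array[i] = (result_array[i] - minVal) / range * 255;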

}

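//The first output is a single-channel map, so wrap it as CV_32FC1 and convert it to 8-bit grayscale for display (a color conversion such as RGB2BGR expects a 3- or 4-channel image)

Mat result_float = new Mat(modelSize, modelSize, MatType.CV_32FC1, result_array);

result_image = new Mat();

result_float.ConvertTo(result_image, MatType.CV_8UC1);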

//Crop away the padding, then restore the original image size

if (width != modelSize || height != modelSize)

{

Rect rect = new Rect(0, 0, width, height);

result_image = result_image.Clone(rect);

}

result_image = result_image.Resize(new OpenCvSharp.Size(oldwidth, oldheight));

pictureBox2.Image = new Bitmap(result_image.ToMemoryStream());

textBox1.Text = "Inference time: " + (dt2 - dt1).TotalMilliseconds + "ms";

}

private void Form1_Load(object sender, EventArgs e)

{

startupPath = Application.StartupPath;

//model_path = startupPath + "\\model\\u2net.onnx";

//model_path = startupPath + "\\model\\u2netp.onnx";

model_path = startupPath + "\\model\\u2net_human_seg.onnx";

modelSize = 320;

//Create the session options, which also control the log output while the model is loaded

options = new SessionOptions();

options.LogSeverityLevel = OrtLoggingLevel.ORT_LOGGING_LEVEL_INFO;

//Run on the CPU

options.AppendExecutionProvider_CPU(0);

//Create the inference session and load the local model file

onnx_session = new InferenceSession(model_path, options);

//Create the input container

input_ontainer = new List<NamedOnnxValue>();

//Input tensor

input_tensor = new DenseTensor<float>(new[] { 1, 3, modelSize, modelSize });

}

private void button3_Click(object sender, EventArgs e)

{

if (pictureBox2.Image == null)

{

return;

}

Bitmap output = new Bitmap(pictureBox2.Image);

var sdf = new SaveFileDialog();

sdf.Title = "Save";

sdf.Filter = "Images (*.bmp)|*.bmp|Images (*.emf)|*.emf|Images (*.exif)|*.exif|Images (*.gif)|*.gif|Images (*.ico)|*.ico|Images (*.jpg)|*.jpg|Images (*.png)|*.png|Images (*.tiff)|*.tiff|Images (*.wmf)|*.wmf";

if (sdf.ShowDialog() == DialogResult.OK)

{

switch (sdf.FilterIndex)

{

case 1:

{

output.Save(sdf.FileName, ImageFormat.Bmp);

break;

}

case 2:

{

output.Save(sdf.FileName, ImageFormat.Emf);

break;

}

case 3:

{

output.Save(sdf.FileName, ImageFormat.Exif);

break;

}

case 4:

{

output.Save(sdf.FileName, ImageFormat.Gif);

break;

}

case 5:

{

output.Save(sdf.FileName, ImageFormat.Icon);

break;

}

case 6:

{

output.Save(sdf.FileName, ImageFormat.Jpeg);

break;

}

case 7:

{

output.Save(sdf.FileName, ImageFormat.Png);

break;

}

case 8:

{

output.Save(sdf.FileName, ImageFormat.Tiff);

break;

}

case 9:

{

output.Save(sdf.FileName, ImageFormat.Wmf);

break;

}

}

MessageBox.Show("Saved successfully. Location: " + sdf.FileName);

}

}

}

}
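The arithmetic inside button2_Click can be looked at separately from the UI and from OpenCV. The sketch below is only an illustration under stated assumptions: the names `U2NetMath`, `FitSize`, `Normalize` and `ToGray` are hypothetical and do not appear in the original project, and the small epsilon guard against a constant output is a choice made for this sketch. It shows the aspect-preserving size calculation, the per-channel normalization, and the min-max scaling of the output.

```csharp
using System;
using System.Linq;

static class U2NetMath
{
    // Size after scaling so that the longer edge equals modelSize (aspect ratio kept).
    public static void FitSize(int width, int height, int modelSize,
                               out int newWidth, out int newHeight)
    {
        float ratio = 1.0f * modelSize / Math.Max(width, height);
        newWidth = (int)(width * ratio);
        newHeight = (int)(height * ratio);
    }

    // Per-channel input normalization: (value / 255 - mean) / std.
    public static float Normalize(byte value, float mean, float std)
    {
        return (value / 255f - mean) / std;
    }

    // Min-max scaling of the raw model output to the range [0, 255].
    public static float[] ToGray(float[] raw)
    {
        float min = raw.Min();
        float max = raw.Max();
        // Guard against a constant output, which would otherwise divide by zero
        float range = Math.Max(max - min, 1e-6f);
        return raw.Select(v => (v - min) / range * 255f).ToArray();
    }
}

static class Program
{
    static void Main()
    {
        // A 640x480 image scaled for a 320x320 model input
        U2NetMath.FitSize(640, 480, 320, out int w, out int h);
        Console.WriteLine(w + "x" + h); // prints "320x240"

        // Red channel of a pure white pixel, using the mean and std from the code above
        Console.WriteLine(U2NetMath.Normalize(255, 0.485f, 0.229f));

        // Three raw output values mapped to gray levels
        Console.WriteLine(string.Join(" ", U2NetMath.ToGray(new float[] { -1f, 0f, 1f })));
    }
}
```

In the example, the 320x240 result is smaller than the 320x320 model input, which is why the form code pads the bottom and right edges before inference and crops the same region from the output afterwards.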